Student and Faculty Research Projects

Graduate Programs in Software faculty members and graduate students collaborate on joint research projects using data science.

Recent Presentations:

S. K. Joshi, M. Rege, O. Dubey, P. Dwivedi, Hemachandran K, and R. V. Rodriguez, “Multi-Agent Consensus of Wheeled Mobile Robots via Beyond-Pairwise Interaction Frameworks”, in Proceedings of the 11th International Conference on Control, Decision and Information Technologies (CoDIT), July 15-18, 2025, Split, Croatia.

Abstract: In this study, we address the consensus formation problem in multi-agent systems, specifically focusing on wheeled mobile robots (WMRs). The paper proposes a hybrid consensus framework that integrates both pairwise and higher-order interactions among robots, aiming to model real-world scenarios involving dense formations and multi-agent group coordination. The framework accounts for non-holonomic constraints of WMRs and incorporates a non-smooth Lyapunov function for stability analysis. By extending classical consensus models with group-level feedback and proving stability using the upper Dini derivative, the proposed solution ensures robust consensus even in the presence of contrarian agents and dynamic environments. The simulation results demonstrate the effectiveness of the proposed model in achieving synchronization and convergence across a network of WMRs.
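For readers unfamiliar with the classical consensus models that the abstract says the framework extends, the following is a minimal sketch of the standard pairwise averaging rule only. It does not implement the paper's higher-order interactions, non-holonomic constraints, or contrarian agents. The function names, step size, ring topology, and initial values are illustrative assumptions, not taken from the paper.

```python
def consensus_step(states, neighbors, step=0.2):
    """One discrete-time update of the classical pairwise consensus rule.

    Each agent moves toward its neighbors:
    x_i <- x_i + step * sum_j (x_j - x_i).
    """
    return [
        x_i + step * sum(states[j] - x_i for j in neighbors[i])
        for i, x_i in enumerate(states)
    ]


def run_consensus(states, neighbors, step=0.2, iterations=200):
    """Apply the update repeatedly and return the final states."""
    for _ in range(iterations):
        states = consensus_step(states, neighbors, step)
    return states


# Hypothetical example: four agents on an undirected ring with scalar states.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
initial = [0.0, 1.0, 4.0, 7.0]
final = run_consensus(initial, ring)
print(final)
```

On an undirected graph this update preserves the sum of the states, so with a sufficiently small step the agents settle on the average of their initial values.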

P. Gajbhiye, M. Rege, S. Wanjari, V. Singh, and Hemachandran K, “XGBoost-based Emotion Classification in Healthcare using Electroencephalogram Signals”, in Proceedings of the IEEE Conference on Artificial Intelligence, AI Standards and QA for Healthcare and Life Science track, May 5-7, 2025, Santa Clara, CA, USA.

Abstract: Over the past few years, significant research has been done on the brain signals employed to detect emotions. Researchers have implemented machine-learning techniques to identify emotions as psychological variables, but attaining exact results remains challenging. This paper aims to improve the ability to accurately classify an individual’s affective states using electroencephalogram (EEG) signals. The process analyzes the power spectral density between brain rhythms to extract significant features from EEG signals. Max-depth and n-estimator selection are utilised to improve the feature extraction process. Then, we utilise the Extreme Gradient Boosting (XGBoost) to classify the data. The Gradient boosting framework is the basis of XGBoost, an ensemble of linear model solutions with tree-based learning methods to improve predictive accuracy. The proposed approach was evaluated against various classification techniques on the DEAP dataset. These techniques included feedforward neural networks (FNN), AdaBoost, K-Nearest Neighbours (KNN), and Random Forest. The emotions that were modeled were dominance, arousal, liking, and valence. The proposed method was more precise than other techniques in classifying emotions: 86.39% for liking, 83.60% for arousal, 84.09% for dominance, and 84.87% for valence. The results indicate that the proposed method performs well at enhancing the precision of mood classification and has the potential to be applied in affective computing and brain-computer interface (BCI) systems.

S. K. Joshi, O. Dubey, P. Dwivedi, Hemachandran K, R. V. Rodriguez, and M. Rege, “LIME-Based Explainable AI Approach for Battery Health Monitoring”, in Proceedings of the IEEE Conference on Artificial Intelligence, AI Standards and QA for Smart Grids and Clean Energy track, May 5-7, 2025, Santa Clara, CA, USA.

Abstract: It is crucial to monitor battery health to ensure the reliability and longevity of energy storage systems. This study presents an Explainable AI (XAI) powered monitoring framework that combines machine learning with improved interpretability. The NASA battery health dataset was used to train two predictive models, Random Forest and XGBoost, using derived voltage, charge voltage, measured current, charge current, temperature, and battery usage time as the key process indicators. The Random Forest model achieved an accuracy of 96 %, while XGBoost attained 98 %. The method also integrated the Local Interpretable Model-Agnostic Explanations (LIME) framework to add transparency to its decisions, further illustrating the importance of features. Real-life use cases were examined to determine how well the system performs in battery condition monitoring. The proposed AI-powered monitor provides trustworthy predictions with understandable insight into better predictive maintenance and fault detection. The enhanced explainable approach of the proposed work increases battery management systems’ utility by addressing crucial aspects of electric vehicles and renewable energy storage systems.

Abstract: Generative artificial intelligence and large language models (LLMs) are gaining focus in a number of industries, including the field of human resources (HR), and have attracted new attention since the release of OpenAI’s ChatGPT in late 2022. This study focuses on generative AI output in the context of ethical AI and algorithmic hiring practices by exploring differences in ChatGPT’s responses to resume writing prompts based on racial and ethnic categories, using a variety of methods to identify and evaluate those differences to assess bias. We find that there are observable qualitative differences in some of the output produced by ChatGPT in response to prompts for different races and ethnicities. These differences reflect potentially negative bias and may promote racial, ethnic, and cultural stereotypes. As such, it is critical for data scientists and HR practitioners to use a variety of methods to rigorously assess the presence of bias and the potential for harm when considering the use of synthetic data in algorithmic hiring practices and other applications. This study addresses ethical concerns regarding the use of ChatGPT to create synthetic text data to train other AI models due to its potential to perpetuate racial and ethnic bias.

Abstract: Navigating the dynamic hospitality landscape, luxury hotels in India face a constant challenge: understanding the nuanced factors driving guest satisfaction. Existing research offers broad insights, but often neglects the intricacies of the luxury segment. This study bridges this gap, meticulously analyzing reviews from over a hundred 5-star-rated hotels in major metro cities in India to identify seven key attributes influencing reviewer perceptions. The findings reveal distinct patterns, with service, staff, and food quality emerging as powerful predictors of both positive and negative sentiments. Notably, cleanliness and comfort exhibit unique impacts, further enriching our understanding of guest expectations. By quantifying the influence of each attribute, this research provides hotel management with actionable insights to tailor offerings and elevate guest experiences, contributing significantly to the ongoing discourse on customer satisfaction in the hospitality sector.

Abstract: This research delves into understanding the experiences of final-year engineering undergraduates through their project work feedback, utilizing Latent Dirichlet Allocation (LDA) and PyLDAvis visualization. The study dissects responses to ten carefully selected questions, revealing central themes: the evolution of collaboration, the alignment of projects with core values like innovation and sustainability, and the development of technical skills such as design and troubleshooting. These insights offer valuable guidance for educators to adapt teaching and curriculum design to student experiences and industry trends. The study highlights the efficacy of LDA and PyLDAvis in capturing and visualizing the nuanced perspectives of engineering students, paving the way for enhanced and responsive engineering education.

S. M. Ganie, Hemachandran K, and M. Rege, “Transfer Learning for Potato Leaf Disease Detection”, in Proceedings of the 18th Research Challenges in Information Science, May 14-17, 2024, Guimarães, Portugal.

Abstract: Deep learning techniques have demonstrated significant potential in the agriculture sector to increase productivity, sustainability, and efficacy for farming practices. Potato is one of the world's primary staple foods, ranking as the fourth most consumed globally. Detecting potato leaf diseases in their early stages poses a challenge due to the diversity among crop species, variations in symptoms of crop diseases, and the influence of environmental factors. In this study, we implemented five transfer learning models including VGG16, Xception, DenseNet201, EfficientNetB0, and MobileNetV2 for a 3-class potato leaf classification and detection using a publicly available potato leaf disease dataset. Image preprocessing, data augmentation, and hyperparameter tuning are employed to improve the efficacy of the proposed model. The experimental evaluation shows that VGG16 gives the highest accuracy of 94.67%, precision of 95.00%, recall of 94.67%, and F1 Score of 94.66%. Our proposed novel model produced better results in comparison to similar studies and can be used in the agriculture industry for better decision-making for early detection and prediction of plant leaf diseases.

G. V. Shobika, S. P. Siddharth, Vishwa KD, Hemachandran K, and M. Rege, “ANN Model for Analytics”, in Artificial Intelligence and Knowledge Processing: Improved Decision Making and Prediction, CRC Press, Taylor & Francis, 2024.

Abstract: A neural network is a data encoding and decoding system made up of a large number of simple processing components that are highly coupled and arranged in a pattern inspired by the cerebral cortex section of the brain. As a result, neural networks can be utilized to tackle issues that traditional computers cannot. Neural networks have emerged as a promising area for research, development, and application to a wide range of real-world circumstances. Indeed, neural networks have qualities and capacities that no other technology has. Artificial neural networks (ANNs) are functional replicas of biological neurons with the purpose of building usable computers for real-world problems. The use of these ANNs has grown substantially in recent years, driven by both theoretical and practical implementations in a variety of fields. A basic theory of ANN is presented, as well as promising topics for research and future trends.

S. Darly, D. Kadhiravan, Hemachandran K, and M. Rege, “Simulation Strategies for Analyzing of Data”, in Handbook of Artificial Intelligence and Wearables, CRC Press, Taylor and Francis, 2023.

Abstract: This chapter explores simulation strategies as a potent alternative for data analysis that can be used across multiple domains. We delve into the diverse applications of simulations in various domains, highlighting their effectiveness in generating insights, making predictions, and informing decision-making processes. Through a comprehensive review of simulation methodologies, we shed light on data sources, data formats, data processing, and their versatility and adaptability, showcasing their potential to address complex data-related challenges. Furthermore, we discuss the problem statement and provide the solution using the Monte Carlo simulation approach with the ability to handle non-AI-ready datasets, minimize bias, and facilitate scenario testing. This research serves as a valuable resource for practitioners seeking innovative and AI-independent approaches to analyzing data in today's data-driven landscape.

Social media, due to its deep use throughout the United States, has the potential to supply accurate opinion polling. This study aims to replicate job approval polls conducted by professional pollsters through the utilization of sentiment analysis. A Kaggle dataset of Twitter messages from the end of the 2020 United States Election was selected and prepared. The sentiment of each tweet within this dataset was classified by language models created by cloud providers and accessed through APIs. We used two evaluation methods: hypothesis testing and confusion matrix accuracy. Regarding the hypothesis testing, the sentiment classification proportions were evaluated against job approval data aggregated from professional pollsters and did not perform well enough to accept the null hypothesis of no independence. Regarding the confusion matrix accuracy, the classifiers were evaluated against two sets of manually labeled tweets: general sentiment and political sentiment. The classifiers performed reasonably with general sentiment but poorly for political sentiment.

Lung cancer is among the deadliest diseases affecting people globally. It is therefore crucial to predict and detect the disease as early as possible so that doctors and patients can take appropriate action. Machine learning techniques can be applied to this task. In this study, we used machine learning to predict lung cancer. We explored five machine learning algorithms: Decision Tree (DT), Support Vector Machine (SVM), Naive Bayes (NB), Logistic Regression (LR), and Random Forest (RF). We used a publicly available dataset that contains demographic information on 284 patients with 16 parameters, and performed an extensive exploratory analysis of it. Among the five algorithms, Logistic Regression (LR) gave the best results in terms of F1-score, recall, specificity, accuracy, precision, and negative predictive value (NPV). Compared to similar research works, the proposed model achieved a better prediction result. The proposed model can be applied to other illnesses that have similar symptoms by using transfer learning.

Anomaly detection using audio signals from industrial machines in the manufacturing industry has gained broad interest over the last few years. For example, predictive maintenance solutions utilize raw analog signals to identify trends and patterns. In some scenarios, an engineer working in a factory setting can tell when a machine is behaving abnormally just by hearing unexpected sounds (e.g., a change in loudness) that are well within the human-perceivable frequency range (20 Hz, the lowest pitch, to 20 kHz, the highest pitch) and are typically concentrated in a narrow range of frequencies and amplitudes. The human perception of the amplitude of a sound is its loudness. However, the audio signal in its raw form is not always the best representation of the important features (e.g., frequencies, amplitude, peaks). Additionally, machine learning applications that rely on traditional digital signal processing techniques (e.g., digital signal processors, chips) depend heavily on subject matter experts to tune the system for better performance. Thus, we investigate how the digital transformation of waveform signals from microphone sensors (e.g., audio recordings of industrial pumps, valves, slide rails) into spectrograms can help to monitor machine health (e.g., anomaly classification). In the pre-processing phase, raw audio signals (.WAV format) from each machine are converted to Mel spectrogram images using the short-time Fourier transform. Then, a comparative study of image classification techniques using deep convolutional neural networks (CNN), with and without data augmentation, is conducted to classify images as normal or abnormal. The approach is evaluated using the Malfunctioning Industrial Machine Investigation and Inspection dataset (MIMII dataset). Results show that the neural network based models trained on the dataset with Mel spectrogram transformation perform better than models trained on the raw dataset (i.e., sound samples without spectrogram conversion).

Secondhand Hounds (SHH) is a non-profit animal rescue organization located in Minnetonka, Minnesota and was facing a rise in pet surrenders. A pet surrender is when an owner relinquishes ownership of the pet to a shelter or rescue. On top of this, the processing of surrender applications was a manual and extremely tedious process. The aim of this project was two-fold: create an automated data pipeline and a reporting dashboard for key insights. This would enable data-driven decision making for SHH to hopefully mitigate these surrenders in the future. As part of this project, we collaborated with the team at Secondhand Hounds to automate the processing of surrender applications and storing of records, created a centralized NoSQL database for surrender data and built a business intelligence dashboard for data analysis.

https://iacis.org/iis/2022/2_iis_2022_242-254.pdf

Currently, across the United States, the mortgage market is booming, creating a flood of available mortgages on the secondary market. With the influx of available mortgages for investors to purchase, the space has become highly competitive, and companies are looking for competitive advantages with limited time and resources to invest in the mortgages. The purpose of this study is to show how the use of machine learning and neural networks can help predict mortgages that will have 30+ day late payments. These mortgages can be identified using predictive analytics with machine learning and neural networks. By quickly identifying these mortgages, investors can spend time on more profitable loans to invest in or adjust their pricing models to accommodate the lower return they would expect for loans that might become delinquent.

https://www.iacis.org/conference/proceedings/IACIS_2022_Proceedings

Abstract: Accelerating the process of data collection, annotation, and analysis is an urgent need for linguistic fieldwork and documentation of endangered languages (Bird, 2009). Our experiments describe how we maximize the quality for the Nepal Bhasa syntactic complement structure chunking model. Native speaker language consultants were trained to annotate a minimally selected raw data set (Suárez et al., 2019). The embedded clauses, matrix verbs, and embedded verbs are annotated. We apply both statistical training algorithms and transfer learning in our training, including Naive Bayes, MaxEnt, and fine-tuning the pre-trained mBERT model (Devlin et al., 2018). We show that with limited annotated data, the model is already sufficient for the task. The modeling resources we used are largely available for many other endangered languages. The practice is easy to duplicate for training a shallow parser for other endangered languages in general.

https://aclanthology.org/2022.computel-1.8/

Abstract: Annotated data have traditionally been used to provide the input for training a supervised machine learning (ML) model. However, current pre-trained ML models for natural language processing (NLP) contain embedded linguistic information that can be used to inform the annotation process. We use the BERT neural language model to feed information back into an annotation task that involves semantic labelling of dialog behavior in a question-asking game called Emotion Twenty Questions (EMO20Q). First we describe the background of BERT, the EMO20Q data, and assisted annotation tasks. Then we describe the methods for fine-tuning BERT for the purpose of checking the annotated labels. To do this, we use the paraphrase task as a way to check that all utterances with the same annotation label are classified as paraphrases of each other. We show this method to be an effective way to assess and revise annotations of textual user data with complex, utterance-level semantic labels.

https://arxiv.org/abs/2204.00916

The main purpose of this study is to use machine learning techniques that have potential to predict breast cancer survival more accurately and can prevent unnecessary surgical treatment procedures. Using these machine learning models on genetic data has the potential to improve our understanding of cancers and survival prediction. The dataset is taken from ‘The Molecular Taxonomy of Breast Cancer International Consortium (METABRIC)’ database which contains the targeted sequencing of genomic data of primary breast cancer patients. The dataset is run through various machine learning algorithms such as Logistic Regression, KNN, Decision Tree, Random Forest, Extra Trees, Adaboost, Support Vector Machines (SVM) to predict the survival status. The results are compared to the traditional survival analysis models. This study aims to showcase that machine learning models would help accurately predict the outcomes of breast cancer prognosis, which will help survival chances by providing treatment procedures in early stages of the cancer.

https://www.iacis.org/iis/2021/2_iis_2021_312-321.pdf

Governments around the world have implemented various public health and policy countermeasures intended to slow the continued outbreak of COVID-19 disease in 2020 and into 2021. How effective are these countermeasures, and is there a causal relationship between government policy response, confirmed case counts, and deaths that would allow for better forecasting? This study aims to identify relationships between various indexes of government policy, confirmed case counts, and confirmed deaths and quantify their predictive power using vector autoregression (VAR) methods and SAS/ETS® software. Our findings show that multivariate vector autoregression and vector error correction models generally outperform univariate models for most countries. We did not find substantial evidence that including government policy response metrics in the models improves forecasting accuracy.

https://www.iacis.org/iis/2021/2_iis_2021_122-135.pdf

A. Khatri, D. Labajo, and M. Rege, “Reliability of NLP Models to Predict Human Sentiments”, in Issues in Information Systems, Vol 22, Issue 4, pp. 33-48, 2021.

Advancements in Artificial Intelligence have led to widespread adoption across various industries (McKinsey Survey 2019). It has attained a level of inferential performance that would have been otherwise challenging to achieve a few decades ago (Anyoha, Rockwell 2017). Models that perform time-series prediction, regression, and facial recognition, among others, have become widely accepted in various industry applications given the effectiveness and reliability of the technology. As AI continues to evolve, companies are beginning to look at AI to gain meaningful customer insight by interpreting human thoughts, sentiments, and empathy (Prentice, Catherine, Nguyen, Mai 2020). Traditionally, AI could correlate patterns from a vast collection of words, but how will it overcome challenges given the distinctiveness of each person’s expressions, emotions, and experiences (Purdy 2019)? A typical sentiment analysis focuses on predicting a positive or negative polarity of a given sentence. This task works in the setting that the given text has only one aspect and polarity. A typical ML model does well in this situation. However, a more general and complicated task is to predict based on different aspects mentioned in a sentence and the sentiments associated with each one of them. Our paper will attempt to show the challenges associated with the issue of multi-polarity and the role it plays in incorrectly predicting a neutral sentiment. As AI and human lives become increasingly intertwined, this paper will attempt to test the reliability of AI to wade through the complex human sentiments from words and sentences within a contextual domain and attempt to uncover the challenges that impede the reliability of AI to accurately infer human sentiments (Hussein, D.M.E 2018).

https://iacis.org/iis/2021/4_iis_2021_34-50.pdf

T. Le and M. Rege, “Effective feature for micro-expression recognition”, in Proceedings of IEEE 22nd International Conference on Information Reuse and Integration for Data Science, Aug 10-12, 2021.

When an emotional state is involuntarily and spontaneously delivered with low intensity and short duration, micro expression (ME) occurs. Developing from psychological perspectives to computer vision standpoints, ME has obtained huge advancements and breakthroughs. A variety of feature extraction techniques have been introduced based on different outlooks. With exclusive characteristics of ME, geometric feature learning is involved in approaching the problem in this paper. Specifically, we propose a method where dominant facial regions of interest are extracted and used to further learn spatio-temporal features with the space-time auto-correlation of gradients (STACOG) technique. The facial motion features are fed into a multi-layer perceptron network for emotion classification. The combination of ROIs and STACOG captures ME with respect to geometrical property alongside the temporal aspect in three-dimensional space. This puts a stress on presumably suboptimal features, making them more salient and resilient for the classification stage. The framework is evaluated on three well-known, state-of-the-art spontaneous ME databases: CASMEII, SMIC, and SAMM.

https://dl.acm.org/doi/abs/10.1109/IRI51335.2021.00057

Facial micro-expression is a subtle and involuntary facial expression that exhibits short duration and low intensity where hidden feelings can be disclosed. The field of micro-expression analysis has been receiving substantial awareness due to its potential values in a wide variety of practical applications. A number of studies have proposed sophisticated hand-crafted feature representations in order to leverage the task of automatic micro-expression recognition. This paper employs a dynamic image computation method for feature extraction so that features can be learned on certain localized facial regions along with deep convolutional networks to identify micro-expressions presented in the extracted dynamic images. The proposed framework is simple as opposed to other existing frameworks which used complex hand-crafted feature descriptors. For performance evaluation, the framework is tested on three publicly available databases, as well as on the integrated database in which individual databases are merged into a data pool. Impressive results from the series of experimental work show that the technique is promising in recognizing micro-expressions.

R. Panda, P. Sivaprakasam, and M. Rege, "A computational analysis of the impact of population and number of police officers on assault against Law Enforcement Officers", in Proceedings of 60th Annual IACIS International Conference, Oct 7-10, 2020.

Abstract: In the United States, law enforcement professionals are being feloniously or accidentally killed and assaulted in the line of duty. The algorithm proposed in this paper will help to predict the assault count based on the population size of a geographic area and number of police personnel. This paper focuses on analyzing the trends in the numbers of assaults using Jupyter Notebook and SASPy in SAS® University Edition and SAS® Enterprise Miner. A variety of methods, such as Gradient Boosting, Decision Tree, Linear Regression, and Neural Network, will be used to determine the best model.

L. Butler and M. Rege, "Building an Analytical Model to Predict Workforce Outcomes in Medical Education", in Proceedings of 60th Annual IACIS International Conference, Oct 7-10, 2020.

Abstract: Across the United States, there is a shortage of physicians providing care in rural areas. This shortage means patients living in rural communities must travel further with fewer care options. The purpose of this study is to ultimately fill the gap in rural workforce outcomes by identifying students that are likely to practice in rural areas once they complete medical school and residency/fellowship programs. These students may be identified through use of predictive analytics techniques. By identifying these students, we can provide informational material and optional programs to further foster interest in rural care. Through techniques such as feature extraction, resampling and data imputation, we prepare data for various machine learning classifiers. These models allow us to identify features common to urban providers and rural providers. Seventy percent of rural providers were correctly identified as practicing in rural areas, while 25% of their urban counterparts were classified as rural. One characteristic difference between the groups shows rural providers have high average scores through medical school courses, while urban providers have higher standardized test scores.

T. White and M. Rege, "Sentiment Analysis on Google Cloud Platform", in Proceedings of 60th Annual IACIS International Conference, Oct 7-10, 2020.

Abstract: This project explores two services available on the Google Cloud Platform (GCP) for performing sentiment analysis: Natural Language API and AutoML Natural Language. The former provides powerful prebuilt models that can be invoked as a service allowing developers to perform sentiment analysis, along with other features like entity analysis, content classification and syntax analysis. The latter allows developers to train more domain specific models whenever the pre-trained models offered by the Natural Language API are not sufficient. Experiments have been conducted with both of these offerings and the results are presented herein.

E. Friedman, R. Kolakaluri, and M. Rege, "Benford's Law Applied to Precinct Level Election Data", in Proceedings of 60th Annual IACIS International Conference, Oct 7-10, 2020.

Abstract: This paper attempts to determine whether precinct level election data conforms with Benford's Law. In order to evaluate whether election data conform with Benford's Law, we constructed a two-part test. The first test assesses whether election data correlates with Benford's Law. If the election data under study is found to correlate with Benford's Law, we then subject it to the Kolmogorov-Smirnov test, which is used to evaluate more rigorous conformance with Benford's Law and which aids in forensic analysis. We conclude that the frequency pattern of the first digits of the precinct level elections under study correlates strongly with the pattern predicted by Benford's Law of first digits. We also conclude, however, that the correlation is not strong enough for definitive forensic analysis.
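The two-part test described in this abstract can be sketched as follows. This is an illustration only, not the authors' code: the helper names are hypothetical, the input is synthetic (powers of two, a sequence whose leading digits are known to follow Benford's Law approximately) rather than election data, and the second step computes only the Kolmogorov-Smirnov distance between cumulative first-digit distributions, without a significance threshold.

```python
import math
from collections import Counter


def benford_expected():
    """Benford's Law probabilities for leading digits 1 through 9."""
    return [math.log10(1 + 1 / d) for d in range(1, 10)]


def first_digit_frequencies(counts):
    """Observed relative frequency of each leading digit among positive counts."""
    digits = [int(str(c)[0]) for c in counts if c > 0]
    tally = Counter(digits)
    return [tally.get(d, 0) / len(digits) for d in range(1, 10)]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def ks_statistic(observed, expected):
    """Largest gap between the cumulative observed and expected distributions."""
    gap = cum_obs = cum_exp = 0.0
    for o, e in zip(observed, expected):
        cum_obs += o
        cum_exp += e
        gap = max(gap, abs(cum_obs - cum_exp))
    return gap


# Synthetic stand-in for precinct vote counts (an assumption for illustration).
counts = [2 ** k for k in range(1, 61)]
observed = first_digit_frequencies(counts)
expected = benford_expected()

correlation = pearson(observed, expected)  # part one: correlation check
ks_gap = ks_statistic(observed, expected)  # part two: stricter conformance
print(round(correlation, 3), round(ks_gap, 3))
```

A high correlation with a non-trivial Kolmogorov-Smirnov gap would mirror the abstract's conclusion that strong correlation alone is not enough for forensic claims.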

P. Tumu, V. Manchenasetty, and M. Rege, "Context Based Sentiment Analysis Approach Using N-Gram and Word Vectorization Methods", in Proceedings of 60th Annual IACIS International Conference, Oct 7-10, 2020.

Abstract: Consumer reviews are key indicators of product credibility and are central to how almost all product manufacturers align and alter their products to customers' needs. Using sentiment analysis, these reviews can be analyzed for positive, negative, and neutral feedback. Many techniques have been designed in the past to perform sentiment analysis and opinion mining on drug reviews to study the drugs' effectiveness and side effects on people. In this paper, an approach is presented that combines context-based sentiment analysis using N-gram and tf-idf word vectorization to find the sentiment class (positive, negative, or neutral) and uses this sentiment class in Naïve Bayes and Random Classifiers to predict user review emotion. Our validation process involved measuring the model performance using quality metrics. The results showed that the proposed solution outperformed conventional sentiment analysis techniques with an overall accuracy of 89%.
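To illustrate why N-grams add context to tf-idf features, here is a minimal standard-library sketch. It uses the plain tf × log(N/df) weighting, which may differ from the variant the authors used, and the two example reviews and function names are hypothetical. The classification step is not shown.

```python
import math
from collections import Counter


def ngrams(text, n_max=2):
    """Lower-cased word n-grams of length 1..n_max, joined with spaces."""
    tokens = text.lower().split()
    return [
        " ".join(tokens[i:i + n])
        for n in range(1, n_max + 1)
        for i in range(len(tokens) - n + 1)
    ]


def tfidf(docs, n_max=2):
    """Per-document tf-idf weights: term frequency times log(N / document frequency)."""
    grams = [Counter(ngrams(d, n_max)) for d in docs]
    doc_freq = Counter(term for g in grams for term in g)
    n_docs = len(docs)
    return [
        {t: (c / sum(g.values())) * math.log(n_docs / doc_freq[t]) for t, c in g.items()}
        for g in grams
    ]


# Hypothetical reviews: the unigram "good" appears in both, so it carries no
# weight, while the bigram "not good" separates the negative review.
reviews = ["the drug was good", "the drug was not good"]
weights = tfidf(reviews)
print(weights[1]["good"], weights[1]["not good"])
```

In this toy case the unigram alone cannot distinguish the two reviews, whereas the bigram receives a positive weight only in the negative one.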

J. Noble and H. Gamit, "Unsupervised Contextual Clustering of Abstracts", Winner of the 2020 SAS Global Forum Student Symposium.

Watch Unsupervised Contextual Clustering of Abstracts presentation on YouTube

Abstract: This study utilizes publicly available data from the National Science Foundation (NSF) Web Application Programming Interface (API). In this paper, various machine learning techniques are demonstrated to explore, analyze and recommend similar proposal abstracts to aid the NSF or Awardee with the Merit Review Process. These techniques extract textual context and group it with similar context. The goal of the analysis was to utilize the Doc2Vec unsupervised learning algorithm to embed NSF funding proposal abstract text into vector space. Once vectorized, the abstracts were grouped together using K-means clustering. These techniques together proved to be successful at grouping similar proposals together and could be used to find similar proposals to newly submitted NSF funding proposals. To perform text analysis, SAS® University Edition is used, which supports SASPy, SAS® Studio and Python JupyterLab. Gensim Doc2vec is used to generate document vectors for proposal abstracts. Afterwards, document vectors were used to cluster similar abstracts using the SAS® Studio KMeans Clustering Module. For visualization, the abstract embeddings were reduced to two dimensions using Principal Component Analysis (PCA) within SAS® Studio. This was then compared to a t-Distributed Stochastic Neighbor Embedding (t-SNE) dimensionality reduction technique as part of the Scikit-learn machine learning toolkit for Python. In conclusion, NSF proposal abstract text analysis can help an awardee read and improve their proposal model by identifying similar proposal abstracts from the last 24 years. It could also help NSF evaluators identify similar existing proposals, which indirectly provides insight into whether a new proposal is going to be fruitful or not.
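The clustering step can be sketched with plain Lloyd's K-means. This is not the SAS® Studio module or the Gensim pipeline used in the study: the two-dimensional vectors below are hypothetical stand-ins for Doc2Vec embeddings, and seeding centroids from the first k points is a simplification chosen to keep the example deterministic.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain Lloyd's algorithm; the first k points seed the centroids."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical 2-D "document vectors" forming two well-separated groups.
vectors = [
    (0.1, 0.2), (5.0, 5.1), (0.0, 0.1),
    (5.2, 4.9), (0.2, 0.0), (4.9, 5.0),
]
labels, centroids = kmeans(vectors, k=2)
print(labels)
```

Abstracts whose vectors share a label would be treated as similar proposals; real embeddings have many more dimensions, and the seeding would normally be randomized.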

T. Le, T. Tran, and M. Rege, "Dynamic image for micro-expression recognition on region-based framework", in Proceedings of IEEE 21st International Conference on Information Reuse and Integration for Data Science, Aug 11-13, 2020.

Illustration of the four main components of the proposed method for the ME recognition system. MEs in an input video sequence are first magnified using Eulerian video magnification, which enlarges facial movements for the subsequent feature learning phase. The output frames then go through a rank pooling computation, called the dynamic image technique, to extract features. The outcome of this stage is a set of RGB dynamic images representing the features of each frame in the sequence. Certain localized facial regions (forehead, eyebrows, nose, and mouth) are then extracted from the resulting dynamic images to highlight the dominant facial motions in the frames. In the final stage, a CNN model is run on the pre-processed data for emotion categorization.

Abstract: Facial micro-expressions are involuntary facial expressions of low intensity and short duration in which hidden emotions can be revealed. Micro-expression analysis has increasingly received tremendous attention and has advanced in the field of computer vision. However, studying micro-expressions is very challenging and requires considerable resources. Most recent works have attempted to improve spontaneous facial micro-expression recognition with sophisticated, hand-crafted feature extraction techniques. Deep neural networks have also been adopted for this task. In this paper, we present a compact framework in which a rank pooling concept called dynamic image is employed as a descriptor to extract informative features from certain regions of interest, along with a convolutional neural network (CNN) deployed on the resulting dynamic images to recognize the micro-expressions therein. In particular, a facial motion magnification technique is applied to input sequences to enhance the magnitude of facial movements in the data. Subsequently, rank pooling is applied to obtain dynamic images. Only a fixed number of localized facial areas are extracted from the dynamic images, based on observed dominant muscular changes. CNN models are fit to the final feature representation for the emotion classification task. The framework is simple compared to those of other works, yet the experimental results we achieved throughout the study support its effectiveness. The experiment is evaluated on three state-of-the-art databases: CASMEII, SMIC, and SAMM.
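To make the rank pooling step more concrete, the sketch below collapses a short frame sequence into a single "dynamic image" using approximate rank pooling weights. It rests on assumptions: the abstract does not say which rank pooling variant was used, so the simplified weighting alpha_t = 2t - T - 1 (for frames t = 1..T) is chosen here for illustration, and the function name `dynamic_image` and the tiny grayscale frames are hypothetical.

```python
def dynamic_image(frames):
    """Collapse T equally sized frames into one weighted-sum image.

    Assumption: simplified approximate rank pooling, alpha_t = 2t - T - 1.
    Later frames get positive weights and earlier frames negative ones,
    so the result encodes how pixel values evolve over time.
    """
    n_frames = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    out = [[0.0] * width for _ in range(height)]
    for t, frame in enumerate(frames, start=1):
        alpha = 2 * t - n_frames - 1
        for r in range(height):
            for c in range(width):
                out[r][c] += alpha * frame[r][c]
    return out


# Hypothetical 1x2 grayscale frames: the left pixel brightens over time,
# the right pixel stays constant.
frames = [
    [[0, 5]],
    [[1, 5]],
    [[2, 5]],
]
result = dynamic_image(frames)
```

Because the weights sum to zero, the static pixel cancels out while the changing pixel produces a non-zero response. This is why a dynamic image emphasizes motion such as subtle facial movements.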

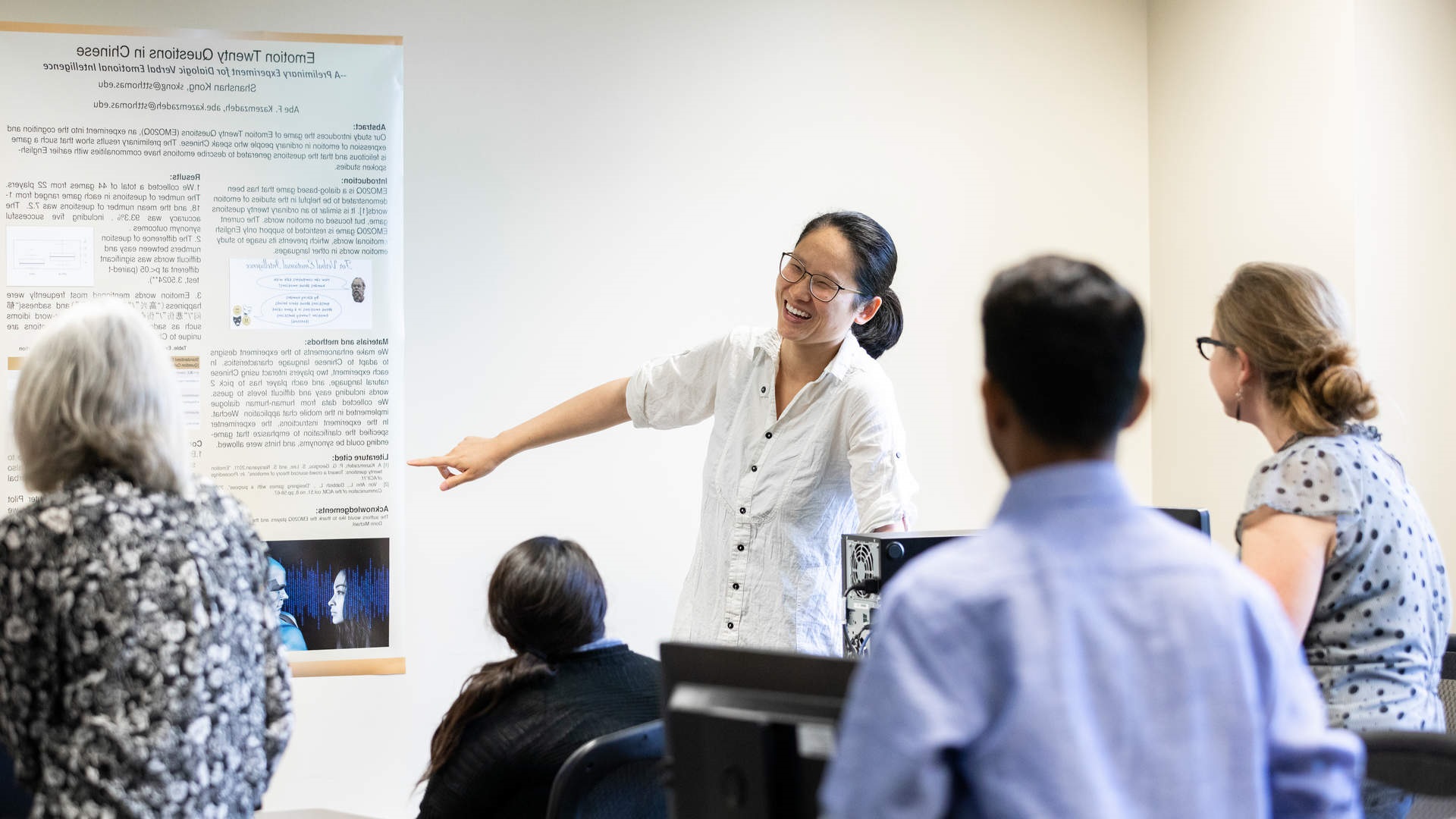

S. Kong and A. Kazemzadeh, "Emotion Twenty Questions in Chinese", presented at Congreso Internacional de Ingeniería de Sistemas (CIIS 2019), Lima, Peru, Sept 5-6, 2019.

Abstract: Our study introduces the game of Emotion Twenty Questions (EMO20Q), an experiment on the cognition and expression of emotion in ordinary people who speak Chinese. The preliminary results show that such a game is felicitous and that the questions generated to describe emotions have commonalities with those from earlier English-language studies.

B. Jackson and M. Rege, "Machine Learning for Classification of Economic Recessions", in Proceedings of IEEE 20th International Conference on Information Reuse and Integration for Data Science, Los Angeles, California, July 30 through August 1, 2019.

Abstract: The ability to quickly and accurately classify economic activity into periods of recession and expansion is of great interest to economists and policy makers. Machine Learning methods can potentially be applied to the classification of business cycles. This paper describes two machine learning methods, K-Nearest Neighbor and Neural Networks, and compares them to a Dynamic Factor Markov Switching model for determining business cycle turning points. We conclude that machine learning techniques can offer more accurate classifiers that are worthy of additional study.
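As a small illustration of one of the methods named in this abstract, the sketch below implements a K-Nearest Neighbor classifier in plain Python. It is not the paper's model: the function name `knn_predict`, the two unnamed features, and every number in `train` are synthetic values invented for this example, not economic data.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    neighbours = sorted(
        train, key=lambda item: math.dist(item[0], query)
    )[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Synthetic (feature_1, feature_2) observations with made-up labels.
train = [
    ((2.5, -0.1), "expansion"),
    ((3.0, -0.2), "expansion"),
    ((1.8, 0.0), "expansion"),
    ((-1.5, 0.9), "recession"),
    ((-2.0, 1.2), "recession"),
    ((-0.5, 0.6), "recession"),
]
prediction = knn_predict(train, (-1.0, 0.8))
```

With real indicators, the features would usually be standardized first, since Euclidean distance is sensitive to differences in scale.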

K. Wu and M. Rege, "Hibiki: A Graph Visualization of Asian Music", in Proceedings of IEEE 20th International Conference on Information Reuse and Integration for Data Science, Los Angeles, California, July 30 through August 1, 2019.

Hibiki's Graph Visualization is an interactive tool for exploring music albums and artists. Its interface includes (a) a main panel that renders nodes and relationships, (b) a taxonomy panel that gives node counts, (c) an information panel that gives details on selected nodes, and (d) a toolbar for extra functions.

Abstract: Creating a visualization for a specific subdomain is an arduous task, since most commercial visualization tools are written to be applicable to multiple subject domains. These tools are certainly powerful but inherently weaker, since they were not written with a specific subject domain in mind. Thus, many researchers may want to create their own visualization. The goal of this project is to create a Neo4j database and an interactive web interface for a dataset that covers the intricacies of the East Asian music scene, primarily focused on Japanese music. This paper serves as documentation to help other authors understand the processes involved in designing and creating similar tools. We break the project down into three separate components. First, we introduce the fundamentals of a Neo4j Graph Database and data mapping design decisions. Next, we explore what an ETL process looks like and how to implement it using Ruby libraries. Finally, we look at the design of the graph visualization software, its components, and key design decisions. We end the discussion with an analysis of the visualization's effectiveness in providing information and of how to improve its computational efficiency.

R. Mbah, M. Rege, and B. Misra, "Using Spark and Scala for Discovering Latent Trends in Job Markets", in Proceedings of 2019 The 3rd International Conference on Compute and Data Analysis, Maui, Hawaii, March 14-17, 2019.

Abstract: Job markets are experiencing an exponential growth in data alongside the recent explosion of big data in various domains including health, security, and finance. Staying current with job market trends entails collecting, processing, and analyzing huge amounts of data. A typical challenge with analyzing job listings is that they vary drastically with regard to verbiage; for instance, a given job title or skill can be referred to using different words or industry jargon. As a result, it becomes necessary to go beyond the words present in job listings and carry out analysis aimed at discovering latent structures and trends in job listings. In this paper, we present a systematic approach to uncovering latent trends in job markets using big data technologies (Apache Spark and Scala) and distributed semantic techniques such as latent semantic analysis (LSA). We show how LSA can uncover patterns, relationships, and trends that would otherwise remain hidden when using traditional text mining techniques that rely only on word frequencies in documents.

R. Mbah, M. Rege, and B. Misra, “Discovering Job Market Trends with Text Analytics”, in Proceedings of the International Conference on Information Technology, Bhubaneswar, India, Dec 21-23, 2017.

Abstract: Due to the dynamic and competitive nature of current job markets, especially the IT job market, it has become necessary for organizations and businesses to stay informed about current job market trends. Staying current with trends entails collecting and analyzing huge amounts of data, which in the past has always involved a great deal of manual work. In this paper, we present our work on collecting, analyzing, and visualizing local job data using text mining techniques. We also discuss the technologies used: cron jobs for automation, Java for API data collection and web scraping, Elasticsearch for data subsetting and keyword analysis, and R for data analysis and visualization. We expect this work to be of relevance to a diverse range of job seekers as well as employers and educational institutions.